Are your hiring strategies truly tapping into the promise of AI, or are they stumbling over the hidden pitfalls of AI-powered recruitment?

By the end of 2025, approximately 60% of organizations are expected to be using AI for end-to-end recruitment processes.

Yet, many still encounter issues related to bias, transparency, and human connection that undermine effectiveness.

That’s why this guide delves into the 10 challenges of AI in recruitment, blending a critical examination of AI’s limitations with practical approaches for maintaining human judgment and fairness.

Let’s get straight into the obstacles and how to navigate them.

Key takeaways

- AI recruiting can amplify bias if unchecked.

Skewed data can cause unfair candidate screening and legal risks. Regular audits and human oversight reduce this problem.

- Transparency is crucial for establishing trust and ensuring compliance.

Black-box AI decisions frustrate candidates and recruiters. Explainable tools and open feedback build confidence and meet legal standards.

- Over-automation risks missing top talent.

Relying too heavily on AI can overlook strong candidates and harm diversity. Human review ensures better hiring outcomes.

- Data privacy and regulations must be prioritized.

AI hiring tools handle sensitive data, so breaches and non-compliance can be costly. Strong security and awareness of global rules are key.

- Cohort AI helps solve these challenges.

It combines explainable shortlists, bias monitoring, privacy safeguards, and human oversight for faster, fairer, and compliant hiring.

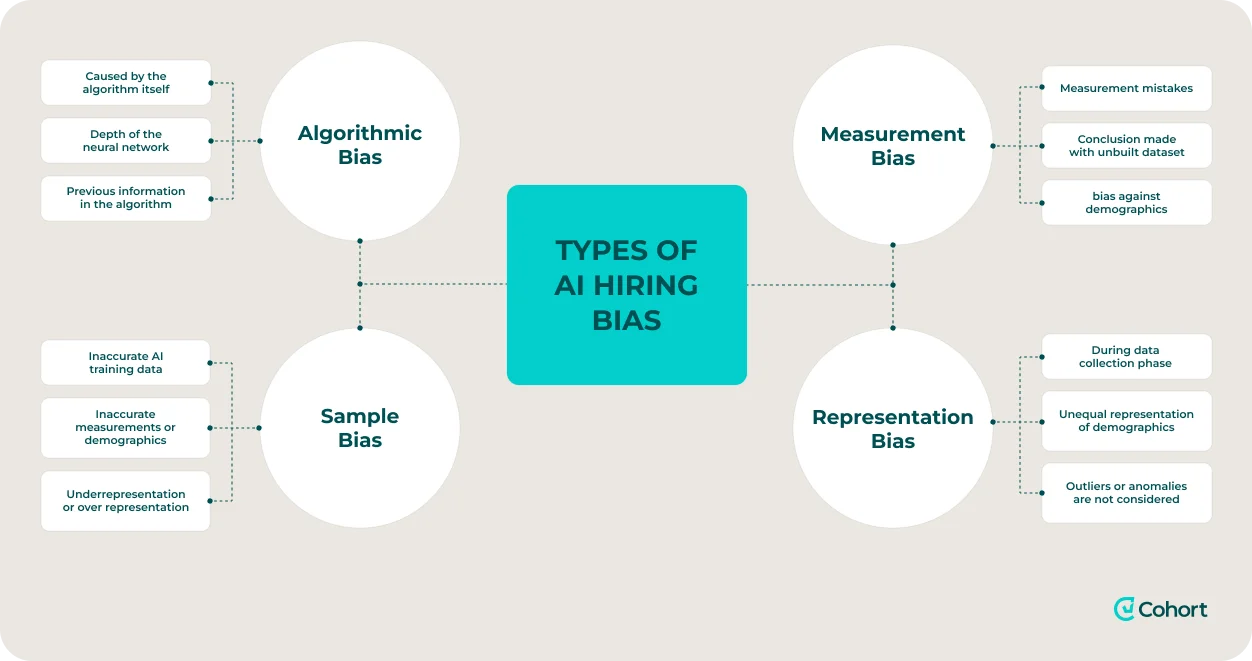

1. Bias in AI recruiting algorithms

Even the most advanced AI tools can unintentionally inherit human biases.

When trained on skewed data, systems may favor certain groups and overlook others.

Amazon saw this when its AI résumé screener downgraded applications with the word “women’s” because the model was trained on résumés from a male-dominated industry.

A 2024 study also found that AI résumé-ranking models favored white-sounding names 85% of the time, while female-sounding names were favored in only 11% of cases.

💡 Why it matters:

Bias in AI is not only a technical issue, but also a business and legal risk.

Discriminatory algorithms can lead to homogenous teams, missed talent, reputational damage, and even lawsuits under anti-discrimination laws.

✅ How to fix it:

- Audit regularly: Conduct bias audits of your AI automation tools to detect disparate outcomes (some cities, like New York, already mandate this by law).

- Improve training data: Retrain models on balanced, diverse data to reduce skew.

- Vet vendors carefully: Demand proof that AI providers test for bias before deployment.

- Keep humans in the loop: Let AI shortlist, but ensure recruiters review recommendations and make final decisions.
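
A bias audit usually starts by comparing selection rates across groups. The sketch below is one minimal way to do that, assuming you can export screening outcomes as (group, selected) pairs. The function names, the sample data, and the 0.8 review threshold (borrowed from the common "four-fifths" rule of thumb) are illustrative assumptions, not a legal test or any vendor's method.

```python
from collections import Counter


def impact_ratios(records):
    """Return {group: (selection_rate, impact_ratio)} for screening outcomes.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True if the AI tool advanced the candidate.
    """
    totals = Counter()
    advanced = Counter()
    for group, selected in records:
        totals[group] += 1
        if selected:
            advanced[group] += 1

    rates = {g: advanced[g] / totals[g] for g in totals}
    best = max(rates.values())
    if best == 0:
        # Nobody was advanced, so ratios are undefined.
        return {g: (rates[g], None) for g in rates}
    # Impact ratio: each group's rate divided by the highest group's rate.
    return {g: (rates[g], rates[g] / best) for g in rates}


def flag_groups(ratios, threshold=0.8):
    """List groups whose impact ratio falls below the review threshold."""
    return sorted(g for g, (_, ratio) in ratios.items()
                  if ratio is not None and ratio < threshold)


# Hypothetical screening outcomes, for illustration only.
sample = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 25 + [("group_b", False)] * 75
)
ratios = impact_ratios(sample)
print(flag_groups(ratios))
```

A flagged group is a prompt for human investigation, not proof of discrimination; small sample sizes in particular can make the ratio swing widely.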

📊 Proof in action:

The U.S. Department of Labor has issued an inclusive hiring framework urging employers to audit and monitor AI tools.

Many HR teams now integrate ongoing bias checks into their recruitment process, making fairness a core design principle.

💡 Pro tip

Cohort lets you tailor matching logic to prioritize diversity, focusing on potential and patterns rather than traditional pedigree.

It aligns the tech with your team’s DEI mission from the start.

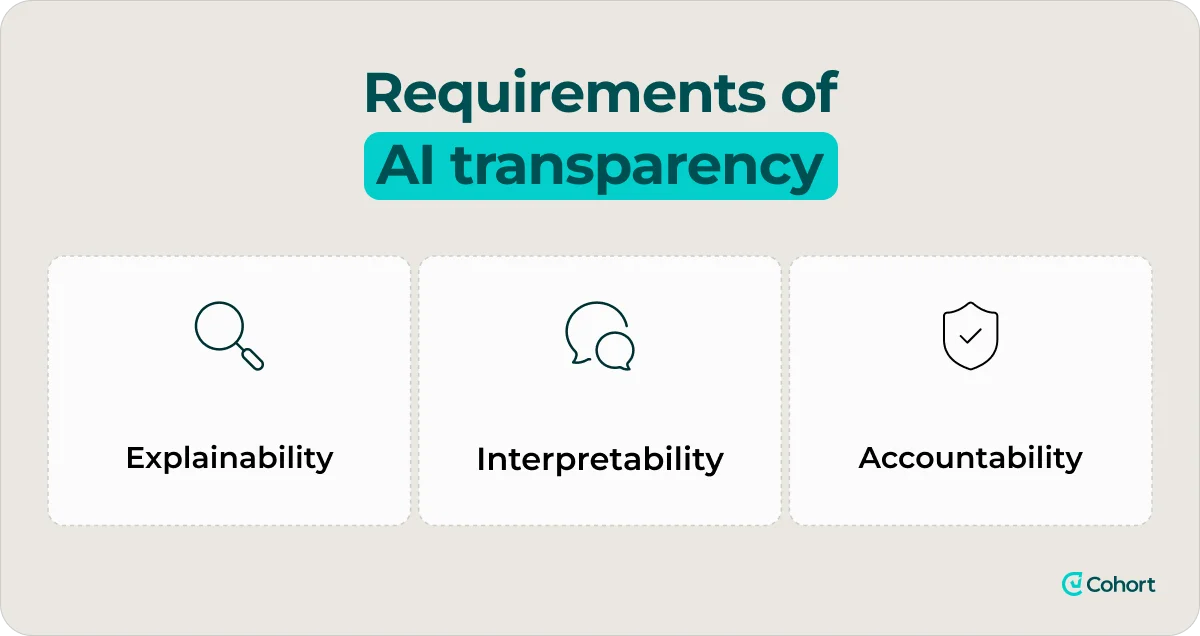

2. Lack of transparency in AI hiring decisions

Many AI hiring tools operate like “black boxes,” producing rankings or rejections without providing any explanation.

Recruiters often don’t know why a candidate was screened out, and candidates are left in the dark as well.

This lack of clarity erodes trust and makes it impossible to challenge or improve decisions.

💡 Why it matters:

Opaque algorithms damage confidence in the hiring process.

Candidates may view decisions as arbitrary or unfair, while recruiters hesitate to trust results they can’t interpret.

In some jurisdictions, such as New York City, transparency is now a legal requirement.

✅ How to fix it:

- Choose explainable AI platforms that show why a candidate was ranked (e.g., “matched 90% of required skills”).

- Work with vendors to understand what factors their algorithm weighs most.

- Share clear, honest feedback with candidates instead of hiding behind “the system.”

- Train HR teams to critically evaluate AI outputs rather than accepting them at face value.

📊 Proof in action:

New York City’s Local Law 144 requires companies to notify candidates when AI is used and mandates annual bias audits, signaling a global shift toward explainability in AI hiring.

3. Over-reliance on automation in recruitment

Automation can streamline repetitive tasks, such as scheduling and résumé scanning, but allowing AI to make hiring decisions unchecked is a risk.

Some firms expect AI to handle the entire hiring process end-to-end within a year.

However, without human oversight, automation often misfires, rejecting strong candidates or amplifying existing biases.

💡 Why it matters:

Too much automation leads to missed talent, legal exposure, and impersonal hiring experiences.

Over-reliance also weakens diversity by filtering out candidates with unconventional career paths or gaps in their résumés.

✅ How to fix it:

- Use AI for supporting tasks (such as scheduling and screening), but require human review before rejection.

- Spot-check patterns in AI outcomes to ensure diversity isn’t being squeezed out.

- Build an “AI second opinion” system: AI suggests, but a recruiter approves.

- Train teams on the limits of automation so they don’t overtrust the system.
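
The "AI suggests, recruiter approves" rule can be enforced in the workflow itself rather than left to habit. Here is a minimal sketch, assuming a hypothetical `Screening` record in which the AI output is stored only as a suggestion and a final decision can be written only with a named reviewer. All names are made up for illustration.

```python
from dataclasses import dataclass, replace
from typing import Optional

VALID = {"advance", "reject"}


@dataclass(frozen=True)
class Screening:
    candidate: str
    ai_suggestion: str                    # hypothetical output of the AI tool
    final_decision: Optional[str] = None  # set only by a recruiter
    reviewer: Optional[str] = None


def finalize(screening, reviewer, decision):
    """Record the recruiter's decision; the AI suggestion is never final."""
    if not reviewer:
        raise ValueError("a named human reviewer is required")
    if decision not in VALID:
        raise ValueError(f"decision must be one of {sorted(VALID)}")
    return replace(screening, final_decision=decision, reviewer=reviewer)


def pending_review(screenings):
    """Screenings that still wait for a human decision."""
    return [s for s in screenings if s.final_decision is None]


def overrides(screenings):
    """Finalized screenings where the recruiter disagreed with the AI."""
    return [s for s in screenings
            if s.final_decision is not None
            and s.final_decision != s.ai_suggestion]


queue = [Screening("cand-1", "advance"), Screening("cand-2", "reject")]
queue[1] = finalize(queue[1], reviewer="recruiter-a", decision="advance")
print(len(pending_review(queue)), len(overrides(queue)))
```

Tracking overrides over time gives a simple signal for the spot-checks described above: a rising override rate suggests the tool and your recruiters are drifting apart.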

📊 Proof in action:

In 2023, the EEOC settled its first AI hiring discrimination case for $365,000 after an automated tool excluded older applicants, showing how unchecked automation can lead to costly compliance failures.

💡 Pro tip

Cohort’s Eva the Evaluator AI agent supports async, text-based screening for early rounds, removing voice and appearance from the equation.

Everyone gets the same structured chance to shine.

4. Data privacy concerns in AI recruiting

AI hiring platforms collect vast amounts of candidate data, ranging from résumés to video interviews, which raises major privacy concerns.

In 2025, a breach of McHire, McDonald’s AI hiring chatbot platform, exposed the personal details of millions of applicants because of a weak password, highlighting the fragility of these systems.

💡 Why it matters:

Data breaches erode candidate trust and expose employers to fines under laws such as the GDPR and CCPA.

Additionally, mishandling personal data can be a reputational disaster.

✅ How to fix it:

- Work only with vendors that follow strict security standards (encryption, SOC 2, ISO 27001).

- Collect only the data you need, and store it for only as long as necessary.

- Be transparent with candidates about how their information is stored and used.

- Regularly audit your systems and require vendors to do the same.
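
Data minimization and retention limits are easier to keep when they are written into the pipeline. The sketch below assumes candidate records are simple dictionaries; the field whitelist, the 180-day window, and the function names are hypothetical placeholders to be replaced by your own policy and legal advice.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical field whitelist and retention window; set these from your
# own policy and legal advice, not from this sketch.
ALLOWED_FIELDS = {"candidate_id", "name", "email", "resume_text", "received_at"}
RETENTION = timedelta(days=180)


def minimize(record):
    """Keep only the fields the hiring process actually needs."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


def purge_expired(records, now=None):
    """Drop records older than the retention window."""
    now = now or datetime.now(timezone.utc)
    return [r for r in records if now - r["received_at"] <= RETENTION]


raw = {
    "candidate_id": "c-001",
    "name": "Sample Candidate",
    "email": "candidate@example.com",
    "resume_text": "...",
    "received_at": datetime(2025, 1, 10, tzinfo=timezone.utc),
    "date_of_birth": "1990-01-01",   # not needed for screening, so dropped
    "video_recording": b"...",       # not needed for screening, so dropped
}
stored = minimize(raw)
print(sorted(stored))
```

Running `purge_expired` on a schedule keeps the stored set within the retention window without relying on manual cleanup.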

📊 Proof in action:

After the McHire breach, regulators and candidates alike doubled down on demanding security guarantees, highlighting the need for proactive safeguards rather than reactive fixes.

5. AI recruitment compliance with global regulations

The regulatory landscape around AI in hiring is tightening fast.

From New York’s mandatory bias audits to the EU AI Act classifying recruitment algorithms as “high risk,” companies must navigate a growing patchwork of rules.

Failure to comply can mean multi-million-dollar fines or lawsuits.

💡 Why it matters:

Non-compliance is costly: it can trigger lawsuits, brand damage, and heavy penalties (up to 7% of global revenue under the EU AI Act).

So today, compliance is a business imperative.

✅ How to fix it:

- Stay up to date with local and global regulations (e.g., NYC audits, EU AI Act).

- Require vendors to provide documentation and risk assessments.

- Conduct internal bias audits even when not legally required.

- Ensure that humans can override AI decisions at every stage of the hiring process.

- Train HR staff to understand and apply compliance obligations.

📊 Proof in action:

Workday is currently facing a class-action lawsuit alleging that its AI screening discriminates by age and race, demonstrating that vendors and employers alike are now being held accountable under employment law.

💡 Pro tip

Cohort AI remains compliant worldwide by utilizing Standard Contractual Clauses for international data transfers and adhering to privacy rights under the GDPR, CCPA, and other relevant state laws.

Clients can be confident that candidate data is handled with safeguards that meet both U.S. and EU standards.

6. Misinterpretation of candidate soft skills by AI tools

AI still struggles with nuance: it can read keywords and scores, but it easily misjudges subtle human cues such as empathy or leadership style.

Tools that infer traits from video or voice often misread accents, neurodivergent communication styles, or cultural nuances, leading to inaccurate results.

💡 Why it matters:

Misreads create false negatives and false positives.

Great collaborators can be filtered out, while smooth performers in one-way video get overrated.

✅ How to fix it:

- Utilize human-led behavioral interviews, group exercises, and reference checks to assess soft skills.

- Let AI do transcripts and summaries, then have recruiters interpret answers in context.

- Validate any soft-skill assessments against on-the-job performance for your roles.

- Offer opt-outs or re-takes when tech glitches or anxiety clearly skew results.

📊 Proof in action:

Major vendors have retired facial-expression scoring due to concerns over accuracy and fairness.

Many teams now use AI solely to assist with note-taking, while humans assess soft skills.

💡 Pro tip

Cohort matches candidates to roles based on skills, behavior, and domain fit, rather than just job title or keyword alignment.

You get better signals and stronger day-one fits.

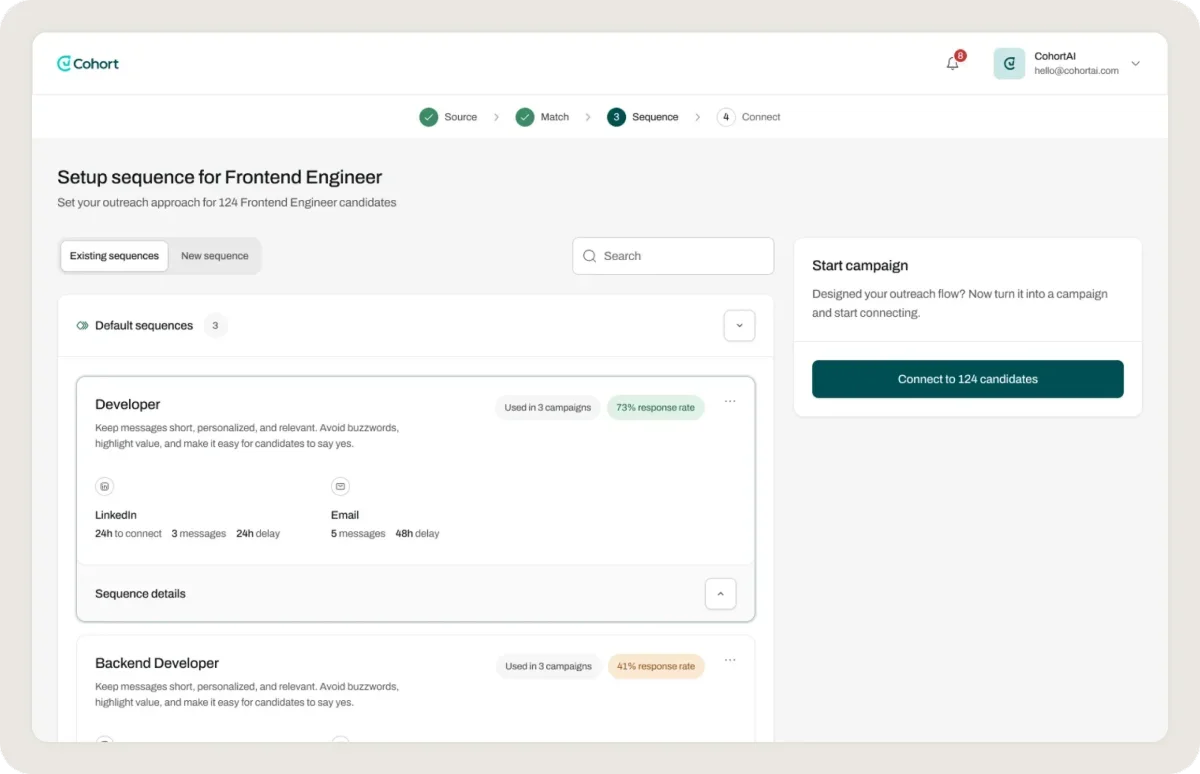

7. Personalization challenges in AI-driven outreach recruiting

AI can draft at scale, but genuine personalization requires insight into a candidate’s motivations, timing, and fit.

Candidates quickly spot template emails with token placeholders.

💡 Why it matters:

Generic outreach can depress response rates and harm brand perception.

True personalization consistently outperforms batch messages.

✅ How to fix it:

- Use AI to research and summarize, then have recruiters add specific hooks about projects, achievements, or career arcs.

- Segment by persona and craft focused templates for each segment instead of a single catch-all.

- Create a concise voice guide to ensure AI drafts align with your tone.

- A/B test subject lines and openings, and track reply rates to refine prompts.
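
When comparing reply rates between two outreach variants, it helps to check whether the gap is larger than chance would explain. The sketch below uses a standard two-proportion z-test with only the Python standard library; the function name and the sample counts are invented for illustration, and the test assumes reasonably large, independent samples.

```python
import math


def reply_rate_test(sent_a, replies_a, sent_b, replies_b):
    """Compare two reply rates with a two-sided two-proportion z-test.

    Returns (rate_a, rate_b, p_value).
    """
    rate_a = replies_a / sent_a
    rate_b = replies_b / sent_b
    pooled = (replies_a + replies_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        # All messages got the same outcome, so there is nothing to compare.
        return rate_a, rate_b, 1.0
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, p_value


# Hypothetical campaign numbers, for illustration only.
rate_a, rate_b, p_value = reply_rate_test(
    sent_a=200, replies_a=20,   # variant A: generic subject line
    sent_b=200, replies_b=36,   # variant B: recruiter-edited opening
)
print(f"A: {rate_a:.0%}  B: {rate_b:.0%}  p-value: {p_value:.3f}")
```

A small p-value suggests the difference is unlikely to be noise, but it says nothing about why one variant worked, so pair it with a read of the actual replies.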

📊 Proof in action:

Teams that blend AI drafting with human editing receive higher response rates than fully automated blasts, especially in competitive tech roles.

💡 Pro tip

Cohort’s Charlie the Closer AI agent handles candidate nudging, Q&A, and post-interview engagement automatically, while maintaining personalization.

It prevents drop-offs and builds trust across every touchpoint.

8. Losing the human touch in AI-powered hiring processes

Candidates want to feel seen, but a journey of chatbots, auto-screens, and one-way videos can feel cold and discouraging.

💡 Why it matters:

Impersonal processes reduce completion and acceptance rates, because candidates often prefer human interaction at key stages of the process.

✅ How to fix it:

- Keep humans in early touchpoints: brief recruiter calls, personal check-ins, named contacts.

- Use AI for speed, not for relationship building.

- Explain why you use AI and how it benefits the candidate.

- Offer a direct help path when tech or accessibility issues arise.

📊 Proof in action:

Firms that pair automation with a candidate concierge or early human screening report stronger satisfaction scores and lower drop-off rates.

💡 Pro tip

Cohort AI works best with humans in the loop: let it surface a tight shortlist, then have recruiters validate, annotate, and re-rank against your culture and context.

Set a rule that no candidate is rejected without human review and feed those decisions back into Cohort AI each cycle to raise precision, reduce bias, and protect quality.

9. Inaccurate candidate-job matching with AI

Algorithms over-weight credentials, miss transferable skills, or reproduce historical patterns.

Strong candidates get hidden; odd matches surface.

💡 Why it matters:

Bad matching wastes recruiter time and causes talent loss when good applicants are auto-rejected.

✅ How to fix it:

- Calibrate models to success signals for your roles, not generic proxies.

- Keep humans reviewing borderline or low-ranked profiles for hidden gems.

- Feed outcomes back to the model so it learns from hires and performance.

- Refresh job descriptions and skills taxonomies to reflect reality.

📊 Proof in action:

Teams that add a human review lane for low-ranked profiles uncover viable candidates and improve model precision over time.

💡 Pro tip

Cohort AI helps you build a fairer hiring funnel from the start by using agents like Sally the Scout to surface high-signal candidates based on skills.

Additionally, the talent graph and shortlisting system ensure better matches, consistent rankings, and decisions that align with DEI.

Result? You get a ranked shortlist of the top 3 candidates, ready for review.

10. Ethical issues in automated recruitment decision-making

Automation raises questions about consent, explainability, and accountability, and candidates deserve to know when and how AI is used.

💡 Why it matters:

Ethical lapses can lead to legal exposure, reputational harm, and internal discomfort among recruiters who are asked to defend opaque decisions.

✅ How to fix it:

- Develop an AI ethics policy for recruitment that prioritizes transparency, bias testing, human override, and data minimization.

- Log rationales for significant decisions and maintain audit trails.

- Offer accommodations and alternate assessments where appropriate.

- Establish governance with HR, legal, IT, and diverse stakeholders to review outcomes.
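
An audit trail is most useful when entries cannot be quietly edited later. One possible approach, sketched below, chains each log entry to the previous one with a SHA-256 hash so that tampering becomes detectable. The entry fields and function names are assumptions for illustration; a production system would also need durable storage and access controls.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body):
    """Stable SHA-256 hash of an entry's contents."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(log, candidate_id, decision, rationale, reviewer, when=None):
    """Append a decision with its rationale, linked to the previous entry."""
    if not rationale.strip():
        raise ValueError("every logged decision needs a rationale")
    entry = {
        "timestamp": (when or datetime.now(timezone.utc)).isoformat(),
        "candidate_id": candidate_id,
        "decision": decision,
        "rationale": rationale,
        "reviewer": reviewer,
        "prev_hash": log[-1]["hash"] if log else None,
    }
    entry["hash"] = _digest(entry)  # hash covers everything except itself
    log.append(entry)
    return entry


def verify(log):
    """Return True if no entry was altered, removed, or reordered."""
    prev = None
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, "c-001", "advance", "Meets all required skills", "recruiter-a")
append_entry(audit_log, "c-002", "reject", "Missing required certification", "recruiter-b")
print(verify(audit_log))
```

Because each entry records a named reviewer and a rationale, the same log can support candidate appeals and the governance reviews described above.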

📊 Proof in action:

Companies with documented governance and auditability processes handle candidate challenges more efficiently and maintain trust with regulators and talent.

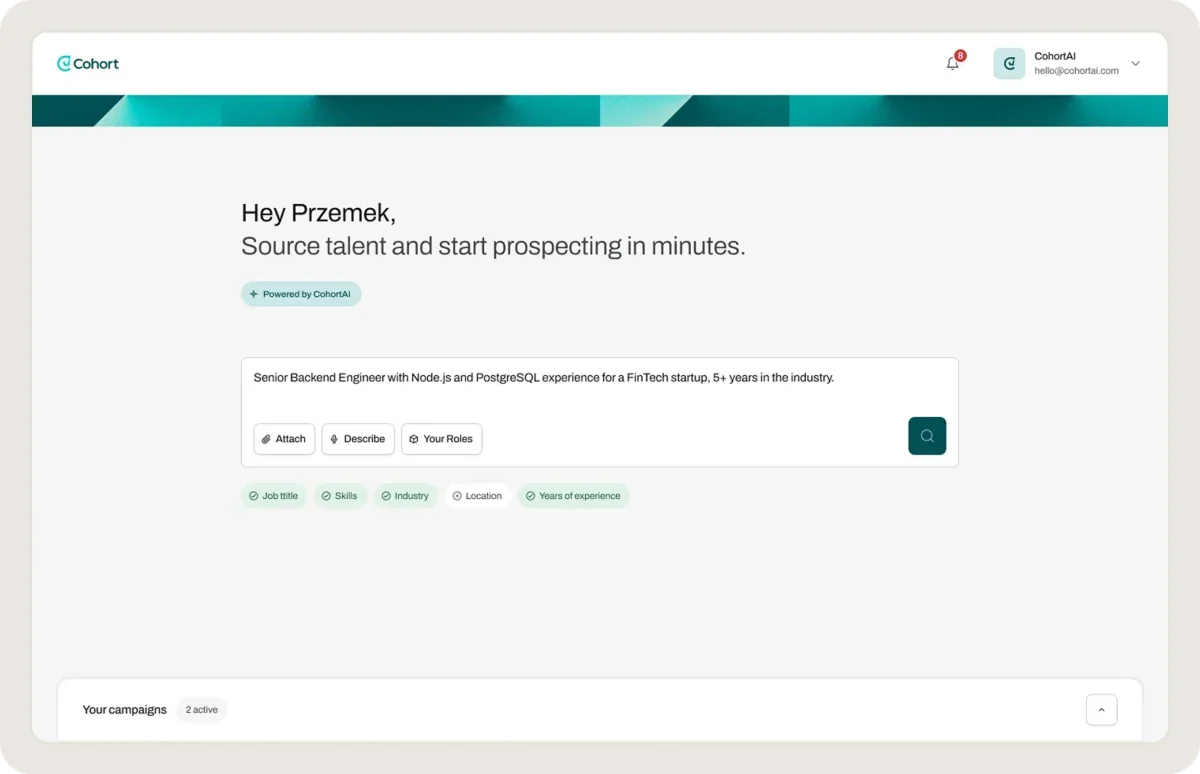

Cohort AI: Solving the big challenges of AI in recruitment with human oversight

Cohort AI is built by engineers and recruiters to address the real challenges of AI hiring, including bias, black-box decisions, brittle personalization, and data risk.

It keeps humans in the loop while its generative AI interprets your role and company context, produces explainable shortlists, and automates outreach responsibly.

Here is how Cohort AI addresses the toughest AI hiring challenges:

- Explainable shortlists: The Talent Graph shows why each candidate matches and flags data gaps recruiters should review.

- Human in the loop: Sally the Scout surfaces matches, and recruiters validate, annotate, and approve before any decision is finalized.

- Bias checks and fairness monitoring: Built-in reporting supports regular audits and highlights disparate impact so you can correct fast.

- Privacy and security by design: Data minimization, access controls, and regional storage options protect candidate information.

- Personalized outreach with guardrails: Pete the Prospector drafts role-specific messages that recruiters edit for tone and accuracy.

- Candidate-first engagement: Charlie the Closer answers common questions and routes nuanced issues to a human quickly.

- Seamless workflow: Open integrations keep everything in your ATS so hiring teams work from a single source of truth.

Struggling with explainability gaps, bias concerns, data privacy questions, or candidate distrust that slows your funnel?

👉 Book your free Cohort AI demo to see how human-in-the-loop safeguards tackle bias, improve transparency, and keep AI hiring compliant.