Reducing bias in hiring is crucial. With 57% of companies spending over 40% of their HR budget on finding talent, a process that overlooks strong candidates wastes much of that investment.

Fair and inclusive hiring matters more now than ever.

The good news? You can cut hiring bias by using structured methods.

These methods help you assess candidates more fairly and objectively.

This guide walks you through 15 strategies to reduce bias in the hiring process and build a stronger, more diverse team.

Let’s get started!

What is hiring bias?

Hiring bias happens when personal opinions or assumptions influence how candidates are judged during hiring.

It can show up in subtle ways, like:

- favoring someone who went to the same university,

- making assumptions based on a name,

- or rating confidence over competence.

The result? Great candidates get overlooked for the wrong reasons.

Remember that bias doesn’t always come from bad intentions, but it can lead to homogeneous teams and missed opportunities.

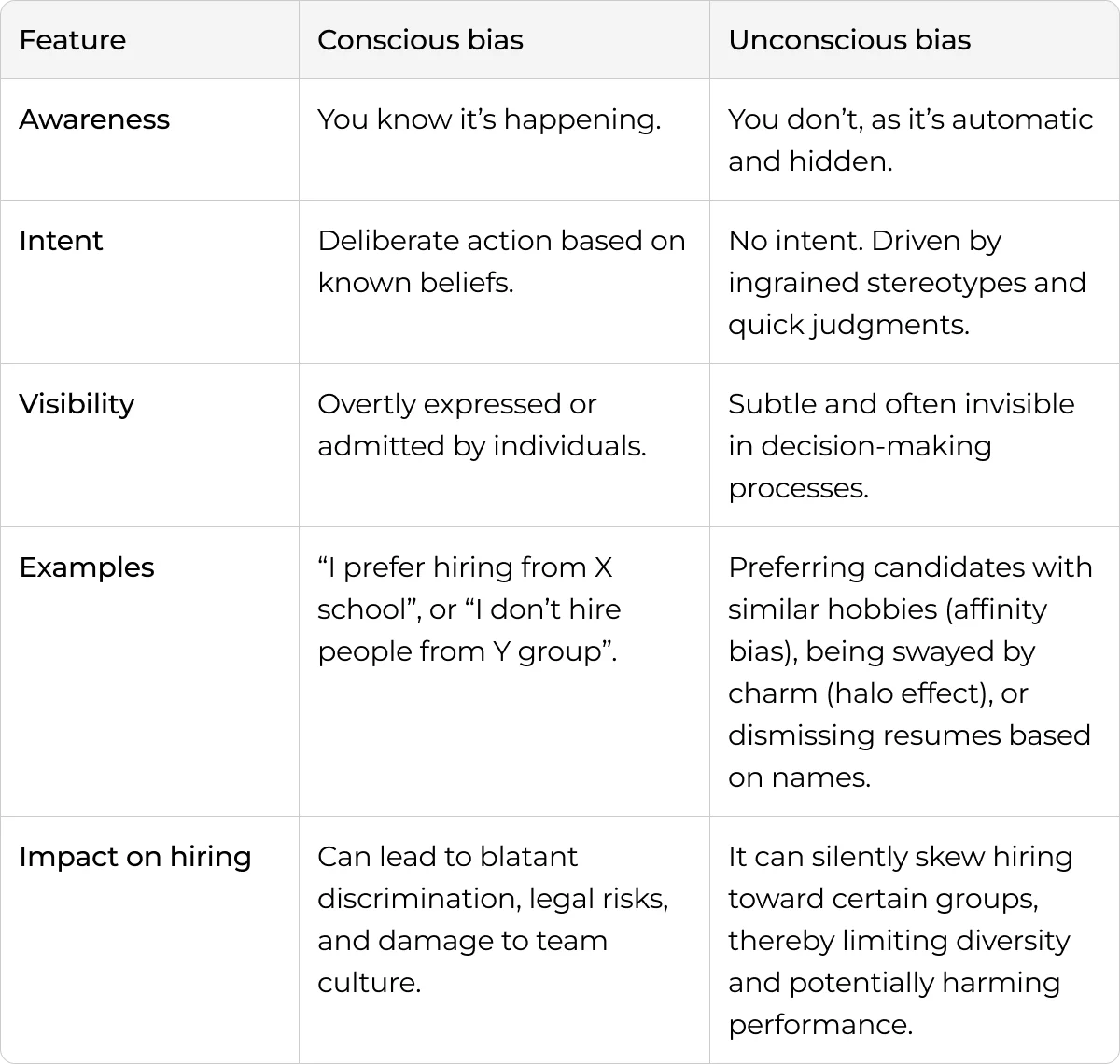

Conscious vs. unconscious bias

Conscious bias occurs when a person intentionally favors or discriminates against someone or a group.

For example, this might mean preferring candidates from a specific background or school.

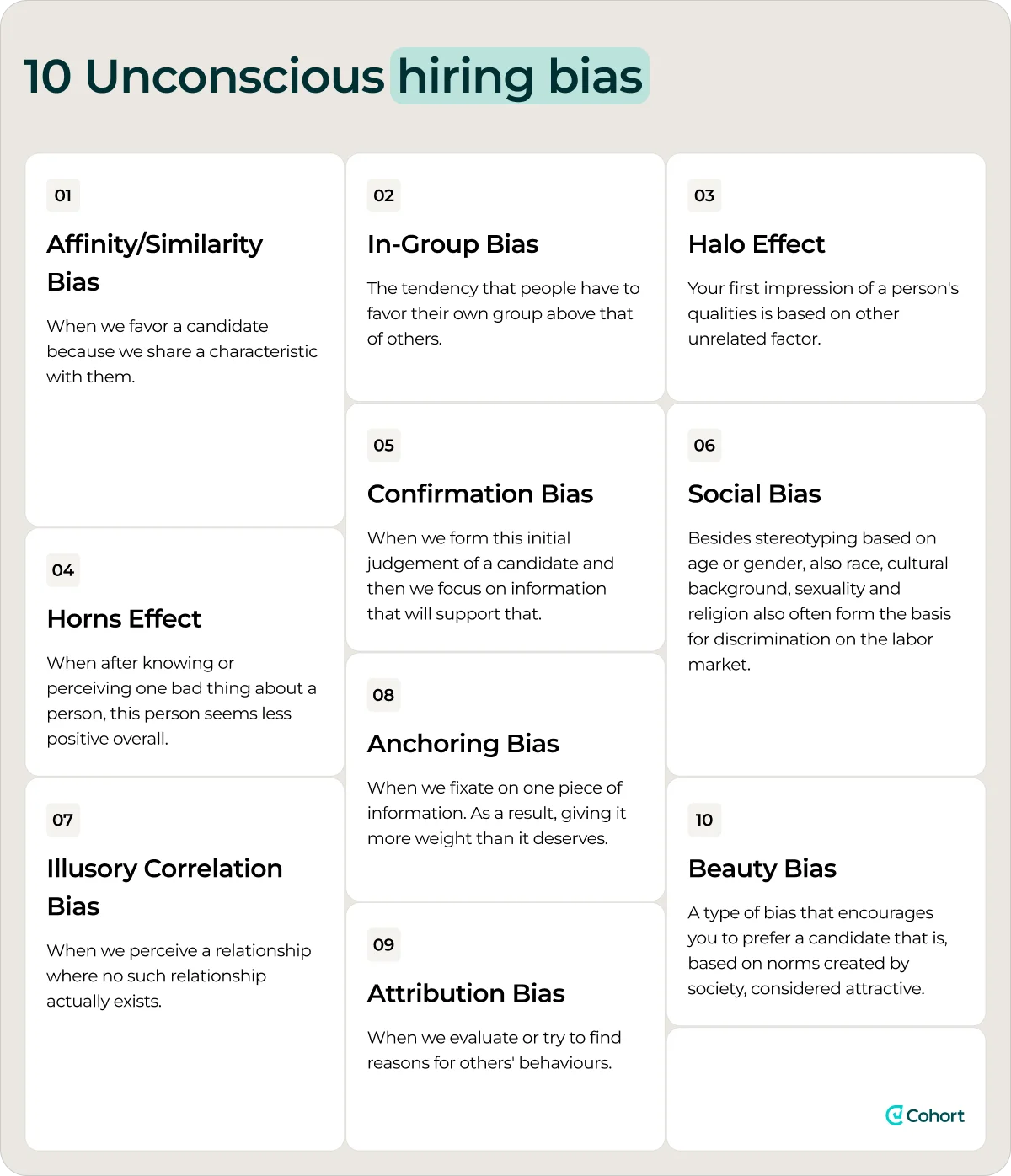

On the other hand, unconscious bias happens without us realizing it.

Our brains often make quick assumptions based on stereotypes or past experiences.

For example, we might think someone is less qualified because of their name, accent, or age.

What triggers hiring bias?

Hiring bias occurs when we make assumptions about candidates.

Instead of looking at their actual qualifications, we let our assumptions guide us.

Here are some common triggers:

- Unconscious or implicit bias: Favoring candidates who seem familiar (same background, gender, school) without realizing it, which reinforces sameness and limits diversity.

- Status quo bias: Defaulting to hiring the same type of candidate to avoid change, which maintains homogeneity and stifles innovation.

- Anchoring bias: Letting one early detail, like a resume gap or past job title, overly influence how a candidate is assessed.

- Perceived manager preferences: Recruiters filter candidates based on what they believe the hiring manager wants, often unintentionally reinforcing bias.

- Stereotypes and myths: Assuming, for example, that disabled candidates can’t perform certain roles or that accents signal lower competence.

- Salary history questions: Basing offers on previous salaries can perpetuate past pay discrimination, especially for women and marginalized groups.

- Age and appearance bias: Judging candidates unfairly based on their age, looks, or accent rather than their skills or experience.

15 Strategies to remove bias from the recruitment process

1. Adopt AI-powered blind screening tools

AI blind screening removes personal details such as names, schools, and graduation years from CVs.

This helps recruiters focus on skills and reduces unconscious bias.

These tools now do more than mask identity: many also score experience, skills, and relevance without relying on prestige signals.

🧠 Why is this essential?

- Names and universities can trigger subconscious assumptions. Removing them helps produce more diverse and qualified shortlists.

🚀 How it’s done:

- Use AI tools that redact personal information and structure resumes for fair comparison.

- Pair blind CVs with automated skills assessments for objectivity.

- Feed anonymized applications into your applicant tracking system (ATS) for consistent screening.
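To make the redaction step concrete, here is a minimal Python sketch. It assumes resumes have already been parsed into a dictionary with hypothetical field names (`name`, `school`, `graduation_year`, and so on). Real blind-screening tools also use entity recognition to catch names and schools buried in free text, which this sketch does not attempt.

```python
import re

# Hypothetical field names for a resume that has already been parsed into a dict.
REDACTED_FIELDS = {"name", "email", "phone", "photo_url", "school", "graduation_year"}

# Four-digit years (19xx or 20xx) in free text can hint at a candidate's age.
YEAR_PATTERN = re.compile(r"\b(?:19|20)\d{2}\b")


def blind_resume(resume: dict) -> dict:
    """Return a copy of the resume with identity and prestige fields removed."""
    blinded = {key: value for key, value in resume.items() if key not in REDACTED_FIELDS}
    # Mask years that leak through in the free-text summary.
    if isinstance(blinded.get("summary"), str):
        blinded["summary"] = YEAR_PATTERN.sub("[YEAR]", blinded["summary"])
    return blinded


candidate = {
    "name": "Jordan Lee",
    "school": "State University",
    "graduation_year": 2012,
    "skills": ["Python", "SQL"],
    "summary": "Data analyst since 2013; led churn modeling.",
}
print(blind_resume(candidate))
# {'skills': ['Python', 'SQL'], 'summary': 'Data analyst since [YEAR]; led churn modeling.'}
```

Because redaction runs before anyone sees the profile, reviewers compare only skills and experience.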

✅ Fun fact:

In U.S. symphony orchestras, blind auditions increased the probability of women advancing out of preliminary rounds by 50% and boosted their chances of winning final rounds severalfold. The switch to blind auditions can explain 30–55% of the rise in female new hires and 25–46% of the overall increase in female orchestra members since 1970.

💡 Pro tip

Cohort AI’s Sally the Scout AI agent sources high-signal profiles across GitHub, LinkedIn, and more, so you review only skills, not surface-level traits.

It keeps the top of your funnel focused on skills from the start.

2. Use structured interviews with digital support

Structured interviews ensure every candidate is asked the same questions and evaluated on the same rubric.

AI can now reinforce that consistency by delivering prompts, scoring responses, and tracking feedback.

🧠 Why is this essential?

- Unstructured interviews rely on familiarity and intuition, both of which can be hotbeds for bias.

🚀 How it’s done:

- Use standardized questions and rating scales.

- Automate delivery using video tools or chat-based AI.

- Train panels to evaluate using shared criteria, rather than relying on instinct.
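Here is a minimal Python sketch of rubric-based scoring, assuming a hypothetical three-criterion rubric on a 1–5 scale. It rejects ratings that skip a criterion or fall off the scale, so every panelist scores every candidate on the same terms, and then averages each criterion across the panel.

```python
from statistics import mean

# Hypothetical rubric: every candidate is rated on the same criteria, 1 to 5.
RUBRIC = ("problem_solving", "communication", "role_knowledge")
SCALE = range(1, 6)


def score_candidate(panel_ratings: list[dict[str, int]]) -> dict[str, float]:
    """Average each rubric criterion across panelists, plus an overall score."""
    for ratings in panel_ratings:
        # Every panelist must rate every criterion, and nothing else.
        if set(ratings) != set(RUBRIC):
            raise ValueError(f"Each rating must cover exactly {RUBRIC}")
        if any(score not in SCALE for score in ratings.values()):
            raise ValueError("Scores must be whole numbers from 1 to 5")
    scores = {criterion: mean(r[criterion] for r in panel_ratings) for criterion in RUBRIC}
    scores["overall"] = mean(scores[criterion] for criterion in RUBRIC)
    return scores


panel = [
    {"problem_solving": 4, "communication": 3, "role_knowledge": 5},
    {"problem_solving": 5, "communication": 3, "role_knowledge": 4},
]
print(score_candidate(panel))
```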

💡 Pro tip

Cohort’s Eva the Evaluator AI agent runs async, role-specific interviews and scores responses based on fit signals you define.

You replace hours of first-round calls with fast, fair assessments.

3. Use AI tools that understand resumes, not just keywords

AI-powered parsers use natural language processing (NLP) to understand job experience in context.

This helps find candidates with the right skills, even if their resumes aren't in a standard format.

🧠 Why is this essential?

- Overreliance on keyword matches penalizes candidates from non-linear backgrounds.

🚀 How it’s done:

- Switch to NLP-based resume parsing tools.

- Rank based on skill relevance and behavioral indicators.

- Continuously refine the ranking logic based on traits shared by top performers.

💡 Pro tip

Cohort’s talent graph connects skills, domains, and public signals across platforms.

This means smarter matches based on how candidates work, not just where they’ve worked.

4. Analyze job descriptions with bias detection tools

Job descriptions are the first signal candidates receive from you. AI tools can flag exclusionary language and rewrite it to invite a broader range of applicants.

🧠 Why is this essential?

- Biased wording deters qualified talent before they even apply.

🚀 How it’s done:

- Scan listings for gendered, ableist, or exclusionary phrasing.

- Use AI suggestions to rewrite content in inclusive language.

- Review how different versions affect applicant diversity.

5. Audit AI hiring tools for fairness

Rather than relying on guesswork, use bias checks built into your tech stack to monitor outcomes across demographics.

🧠 Why is this essential?

- Regular audits catch model drift before it skews outcomes for candidates.

🚀 How it’s done:

- Analyze model output across race, gender, and age brackets.

- Use benchmarks like demographic parity or the four-fifths rule.

- Adjust scoring models to maintain balanced outcomes over time.
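The four-fifths rule comes from the U.S. Uniform Guidelines on Employee Selection Procedures. If a group's selection rate is less than 80% of the rate for the highest-selected group, that is generally treated as evidence of adverse impact. Demographic parity asks that selection rates be roughly equal across groups. The Python sketch below checks both on made-up numbers. The group labels and the `adverse_impact_report` helper are hypothetical, and a flag is a prompt to investigate further.

```python
def adverse_impact_report(outcomes: dict[str, tuple[int, int]], threshold: float = 0.8) -> dict:
    """outcomes maps each group to (number selected, number of applicants)."""
    rates = {
        group: selected / applicants
        for group, (selected, applicants) in outcomes.items()
        if applicants > 0
    }
    top_rate = max(rates.values())
    if top_rate == 0:
        raise ValueError("No group had any selections, so impact ratios are undefined")
    return {
        # Demographic parity gap: spread between the highest and lowest selection rates.
        "parity_gap": round(top_rate - min(rates.values()), 3),
        "groups": {
            group: {
                "selection_rate": round(rate, 3),
                # Four-fifths rule: compare each group's rate to the highest rate.
                "impact_ratio": round(rate / top_rate, 3),
                "flagged": rate / top_rate < threshold,
            }
            for group, rate in rates.items()
        },
    }


# Illustrative numbers only: (selected, applicants) per group.
funnel = {"group_a": (48, 120), "group_b": (24, 100), "group_c": (36, 100)}
report = adverse_impact_report(funnel)
for group, stats in report["groups"].items():
    print(group, stats)
```

Here group_b's selection rate (0.24) is only 60% of group_a's (0.40), so it falls below the four-fifths threshold and is flagged.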

💡 Pro tip

Cohort’s shortlisting system supports fairness by combining AI ranking with human review.

It’s built to avoid feedback loops and maintain consistency across demographics.

6. Combine AI ranking with human judgment

AI can quickly surface top candidates using skill-based indicators, but final decisions are best made when combined with thoughtful human review.

This hybrid model blends speed and objectivity with context and nuance.

🧠 Why is this essential?

- AI is fast and consistent, but it can miss human context. Human oversight prevents unfair decisions and catches what algorithms overlook.

🚀 How it’s done:

- Let AI surface top candidates based on skills, behavioral traits, and alignment with the role.

- Have hiring managers validate shortlists with a structured review.

- Use feedback to improve the system’s rankings over time.

💡 Pro tip

Cohort delivers your top 3 candidates, already ranked for skill and fit, so your team can skip manual sorting and go straight to evaluating impact.

It’s fast, structured, and built to balance AI insights with human oversight.

7. Customize AI to support your DEI goals

Tailor algorithms to align with your company’s values, whether that means over-indexing on potential, surfacing underrepresented talent, or diversifying pipelines.

🧠 Why is this essential?

- If your tech doesn’t reflect your goals, it’s working against you.

🚀 How it’s done:

- Adjust weighting criteria in your matching engine to align with DEI priorities.

- Feed in examples of successful hires from diverse pathways.

- Evaluate progress against internal benchmarks.

💡 Pro tip

Cohort lets you tailor matching logic to prioritize diversity, focusing on potential and patterns rather than traditional pedigree.

It aligns the tech with your team’s DEI mission from the start.

8. Use AI to remove gendered language from job ads

Instead of guessing what’s biased, you can use AI to detect and replace gender-coded words automatically and test readability across diverse groups.

🧠 Why is this essential?

- Inclusive language widens your talent pool without sacrificing clarity.

🚀 How it’s done:

- Scan job posts for subtle bias signals.

- Auto-rewrite sections using neutral phrasing that appeals across demographics.

- Measure changes in applicant mix after updates.
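A simple version of this scan can be sketched in Python with regular expressions. The word stems and suggested swaps below are a small, hypothetical sample for illustration. Production tools rely on larger, research-validated word lists and handle context that plain pattern matching misses.

```python
import re

# Small, hypothetical word-stem lists for illustration only.
GENDER_CODED_STEMS = {
    "masculine": ["aggress", "compet", "domina", "fearless", "ninja"],
    "feminine": ["nurtur", "sympath", "warm"],
}
# Hypothetical neutral replacements for a few masculine-coded words.
NEUTRAL_SWAPS = {
    "aggressive": "proactive",
    "competitive": "motivated",
    "dominant": "leading",
    "ninja": "expert",
}


def scan_job_ad(text: str) -> dict[str, list[str]]:
    """List the gender-coded words found in an ad, grouped by coding."""
    hits = {}
    for coding, stems in GENDER_CODED_STEMS.items():
        # Match any word that starts with one of the stems, case-insensitively.
        pattern = re.compile(r"\b(?:" + "|".join(stems) + r")\w*", re.IGNORECASE)
        hits[coding] = [match.group(0).lower() for match in pattern.finditer(text)]
    return hits


def suggest_rewrite(text: str) -> str:
    """Swap known masculine-coded words for neutral alternatives (whole words only)."""
    for word, neutral in NEUTRAL_SWAPS.items():
        text = re.sub(rf"\b{word}\b", neutral, text, flags=re.IGNORECASE)
    return text


ad = "We want a competitive, aggressive ninja to grow our dominant market share and nurture client relationships."
print(scan_job_ad(ad))
print(suggest_rewrite(ad))
```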

9. Deploy AI chatbots for inclusive candidate engagement

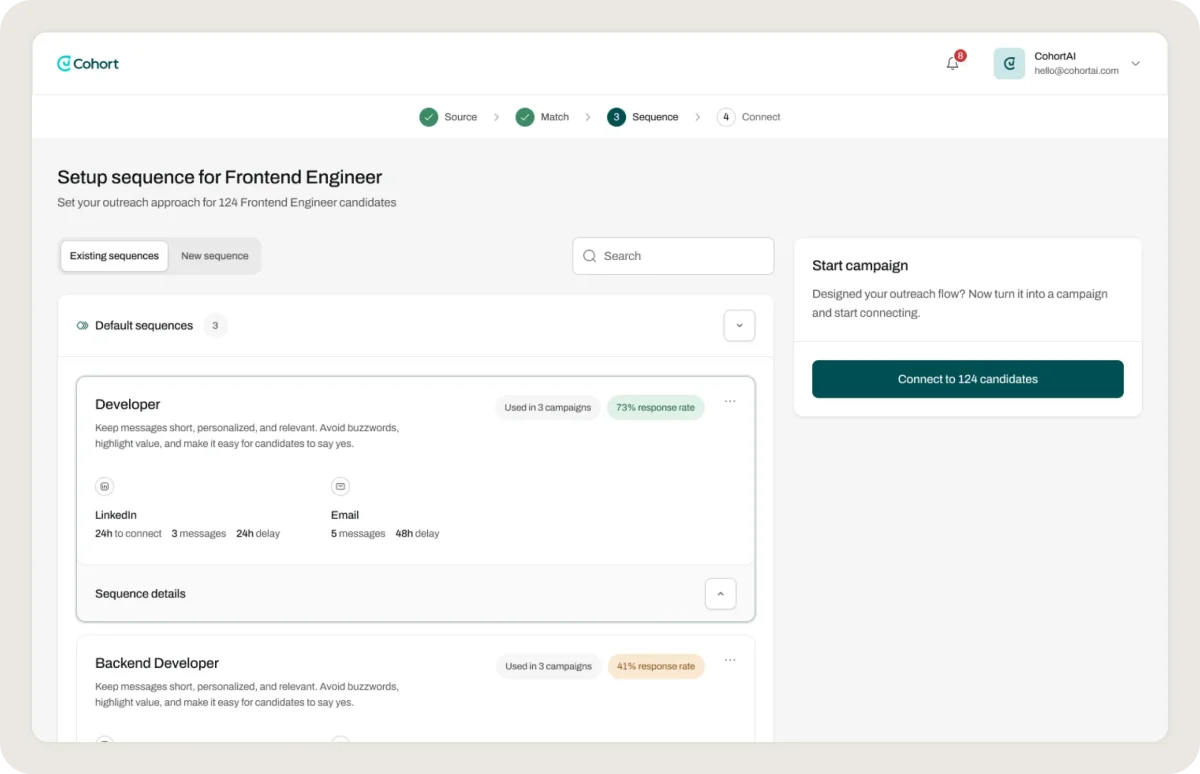

AI-powered messaging agents answer candidate questions, send reminders and status updates, and provide guidance at each stage, keeping communication consistent and bias-free.

🧠 Why is this essential?

- Everyone receives clear information, regardless of their familiarity with hiring norms.

🚀 How it’s done:

- Let AI handle FAQs, scheduling nudges, and check-ins.

- Maintain real-time communication across all channels and devices.

- Use behavioral data to personalize support without introducing bias.

✅ Fun fact:

McDonald’s AI chatbot led to a 60% faster hiring process and a 95% candidate satisfaction rate, while ensuring fairness at every stage.

💡 Pro tip

Cohort’s Charlie the Closer AI agent handles candidate nudging, Q&A, and post-interview engagement automatically.

It prevents drop-offs and builds trust across every touchpoint.

10. Use skills-based assessments with digital platforms

Simulations and challenges offer candidates the opportunity to demonstrate their capabilities, not just their past accomplishments.

🧠 Why is this essential?

- They reduce reliance on background and make real-world performance visible.

🚀 How it’s done:

- Assign coding tasks, design projects, or scenario-based challenges.

- Score automatically using pre-set criteria.

- Integrate with AI-powered evaluation tools that align with success profiles.

✅ Fun fact:

Unilever used digital assessments (like AI-analyzed games and video interviews) and saw higher diversity among entry-level hires, especially those from non-elite schools.

💡 Pro tip

Cohort’s Eva the Evaluator AI agent assesses technical and behavioral fit through asynchronous tasks, providing you with structured signals in hours, not days.

You get fit scores and red flags fast.

11. Conduct ongoing recruitment data analysis

With AI dashboards, you can track how candidates move through your hiring funnel and identify where certain groups drop out or are disproportionately filtered out.

🧠 Why is this essential?

- It turns hunches about bias into clear, actionable data.

🚀 How it’s done:

- Break down pass-through rates by demographic segment.

- Use funnel metrics to identify high-friction points in your hiring process.

- Tie changes to intervention tactics and measure improvements.
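A pass-through rate is the share of candidates at one stage who make it to the next. The Python sketch below computes it per segment from each candidate's furthest stage reached. The stage names, segment labels, and data are hypothetical.

```python
from collections import Counter
from typing import Optional

# Hypothetical funnel stages, in order.
STAGES = ["applied", "screened", "interviewed", "offered"]


def pass_through_rates(candidates: list[tuple[str, str]]) -> dict[str, dict[str, Optional[float]]]:
    """candidates holds (segment, furthest stage reached) pairs."""
    reached = Counter()
    for segment, furthest in candidates:
        # Reaching a stage implies passing every earlier stage.
        for stage in STAGES[: STAGES.index(furthest) + 1]:
            reached[(segment, stage)] += 1

    rates = {}
    for segment in sorted({segment for segment, _ in candidates}):
        rates[segment] = {}
        for current, following in zip(STAGES, STAGES[1:]):
            entered = reached[(segment, current)]
            key = f"{current}->{following}"
            # Rate is undefined when nobody in the segment entered this stage.
            rates[segment][key] = round(reached[(segment, following)] / entered, 2) if entered else None
    return rates


# Made-up data: 10 candidates per segment, recorded by the furthest stage each reached.
candidates = (
    [("A", "applied")] * 4 + [("A", "screened")] * 3 + [("A", "interviewed")] * 2 + [("A", "offered")]
    + [("B", "applied")] * 7 + [("B", "screened")] + [("B", "interviewed")] + [("B", "offered")]
)
for segment, stage_rates in pass_through_rates(candidates).items():
    print(segment, stage_rates)
```

In this made-up data, segment B passes initial screening at half the rate of segment A (0.3 vs. 0.6), which points to the screening stage as the place to investigate first.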

💡 Pro tip

Cohort’s system tracks how candidates move through the funnel and gives insight into who advances and why.

You can identify and resolve drop-off patterns in real time.

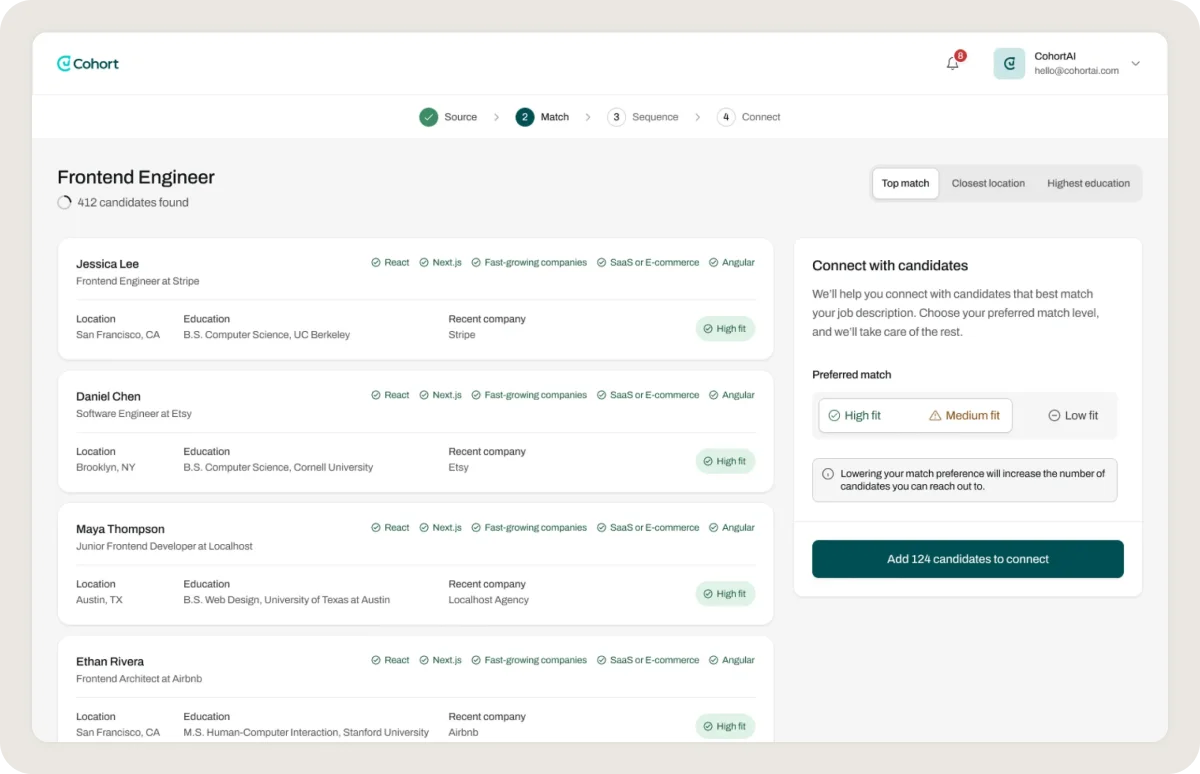

12. Integrate AI-powered candidate matching

AI helps surface matches based on deeper indicators, such as domain knowledge, behavioral traits, and team compatibility, not just keywords or years of experience.

🧠 Why is this essential?

- It broadens your search beyond conventional signals.

🚀 How it’s done:

- Match candidates based on skill clusters, not just job titles.

- Use graph-based intelligence to surface lateral or less obvious connections.

- Validate recommendations against post-hire performance.
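Here is a minimal Python sketch of matching on skill clusters rather than titles. It maps specific tools to broader clusters through a hypothetical lookup table and scores candidates by how many of the role's clusters they cover. This is a simple stand-in for illustration, not how graph-based or LLM matching engines actually work.

```python
# Hypothetical lookup from specific tools to broader skill clusters.
SKILL_CLUSTERS = {
    "pytorch": "deep_learning",
    "tensorflow": "deep_learning",
    "postgresql": "relational_databases",
    "mysql": "relational_databases",
    "airflow": "data_pipelines",
    "dagster": "data_pipelines",
}


def to_clusters(skills: list[str]) -> set[str]:
    """Map each skill to its cluster; unknown skills stand as their own cluster."""
    return {SKILL_CLUSTERS.get(skill.lower(), skill.lower()) for skill in skills}


def cluster_coverage(candidate_skills: list[str], role_skills: list[str]) -> float:
    """Share of the role's skill clusters the candidate covers, from 0.0 to 1.0."""
    required = to_clusters(role_skills)
    if not required:
        return 0.0
    return len(to_clusters(candidate_skills) & required) / len(required)


role = ["PyTorch", "PostgreSQL", "Airflow", "Kubernetes"]
candidates = {
    "candidate_1": {"title": "Research Assistant", "skills": ["TensorFlow", "MySQL", "Dagster"]},
    "candidate_2": {"title": "Senior ML Engineer", "skills": ["PyTorch"]},
}
# Titles are ignored; only skill clusters drive the ranking.
ranked = sorted(candidates, key=lambda name: cluster_coverage(candidates[name]["skills"], role), reverse=True)
print(ranked)
```

The research assistant outranks the senior engineer because their tools cover three of the role's four clusters, even though none of them matches the role's requirements by exact keyword.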

✅ Fun fact:

IBM’s internal AI helped increase promotion rates for underrepresented employees by identifying talent that managers had overlooked.

💡 Pro tip

Cohort matches candidates to roles based on skills, behavior, and domain fit, rather than just job title or keyword alignment.

You get better signals and stronger day-one fits.

13. Enable smart scheduling tools for interviews

AI schedulers cut out back-and-forth coordination and reduce dropout by making scheduling faster and easier for both candidates and interviewers.

🧠 Why is this essential?

- Fewer delays = less friction, more fairness.

🚀 How it’s done:

- Let candidates self-select time slots.

- Auto-distribute prep materials and confirmations.

- Coordinate interviewer availability so every candidate can meet a diverse interview panel.

14. Use text-based screening platforms

Text-based interviews reduce pressure and remove cues such as voice, accent, and facial expressions that can trigger bias.

🧠 Why is this essential?

- It levels the field for candidates who may feel excluded by traditional formats.

🚀 How it’s done:

- Use text-based prompts for early screening rounds.

- Apply language models to assess response quality.

- Keep responses standardized for easier comparison.

💡 Pro tip

Cohort’s Eva the Evaluator AI agent supports async, text-based screening for early rounds, removing voice and appearance from the equation.

Everyone gets the same structured chance to shine.

15. Implement AI-based background checks and references

Tech-enabled reference and background checks help eliminate informal gatekeeping and streamline the validation process.

🧠 Why is this essential?

- Automated systems apply the same standard to every candidate.

🚀 How it’s done:

- Use structured reference forms powered by AI summarization.

- Scan public records and data sources for signals, not assumptions.

- Keep checks consistent across candidates.

💡 Pro tip

Cohort’s Charlie the Closer AI agent can automate reference checks and logistics, ensuring consistency across all candidates.

It handles sensitive details without introducing bias.

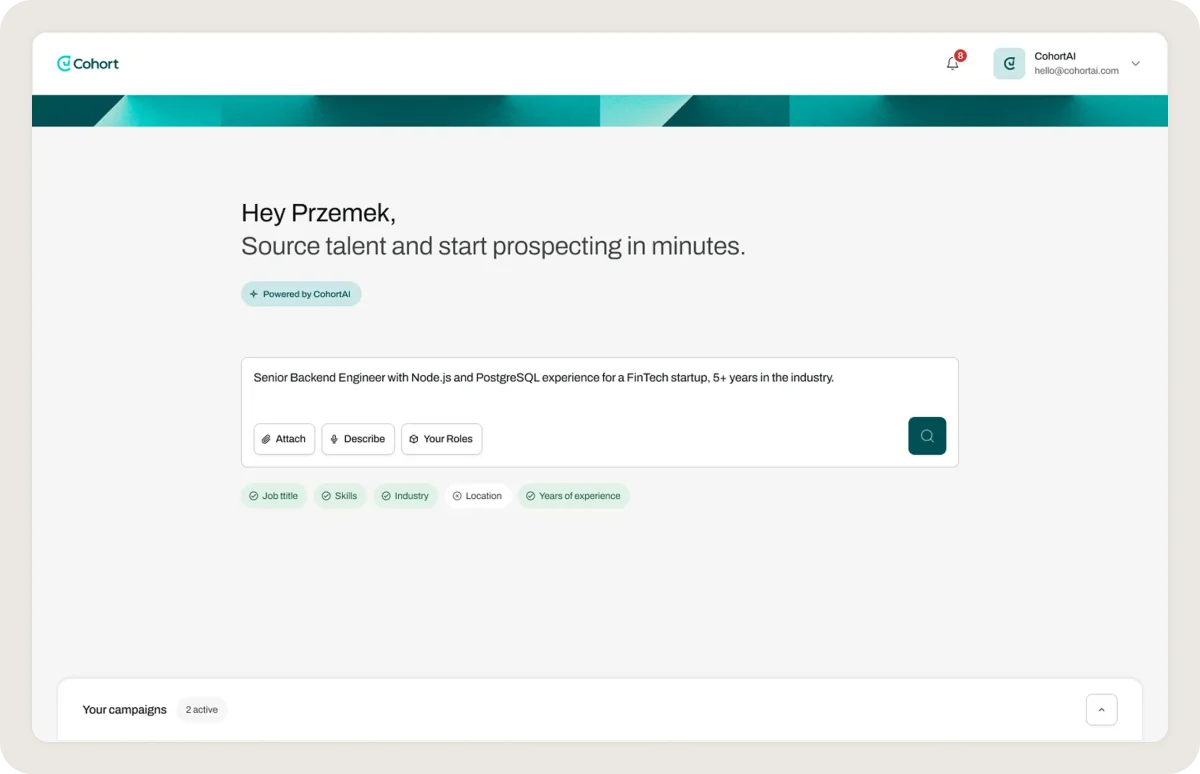

Cohort AI: Your perfect choice for reducing hiring bias

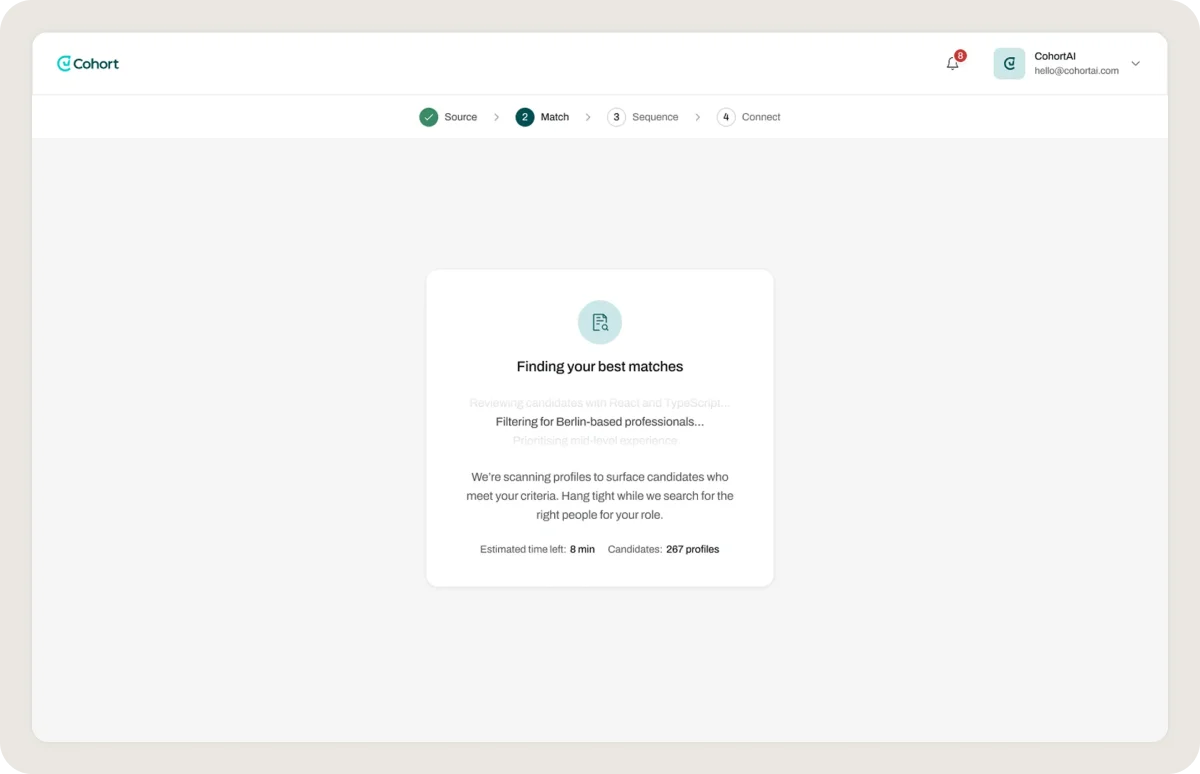

Cohort AI is a recruiting engine powered by specialized AI agents that streamline sourcing, screening, outreach, and candidate engagement.

Designed for speed and accuracy, it helps hiring teams prioritize skills, potential, and performance over personal biases or resume backgrounds.

Here’s how we actively reduce bias in the hiring process:

- Bias-free sourcing: Sally the Scout scans platforms like GitHub, LinkedIn, and Stack Overflow to find strong candidates based on their skills and expertise, before anyone can filter them out based on name, background, or job history.

- Consistent, objective screening: Eva the Evaluator runs asynchronous, structured interviews tailored to each role and your company’s success criteria. Every candidate is evaluated on the same rubric, eliminating inconsistencies that fuel unconscious bias.

- Inclusive outreach at scale: Pete the Prospector sends AI-driven messages tailored to each candidate's profile and actions. This ensures that all candidates receive fair first-touch experiences, regardless of how they look or sound.

- Candidate engagement without bias: Charlie the Closer ensures clear and inclusive communication during the offer stages. This approach reduces drop-offs and builds trust with candidates from different backgrounds.

- Smarter matching, not filtering: The Talent Graph + LLM Matching Layer goes beyond keyword matching. It helps find candidates with unique paths or different credentials. This way, your team can discover potential, not just prestige.

- Top 3, bias-aware shortlists: Within 10 working days, you get three pre-vetted, high-fit candidates selected through AI ranking and behavioral signals, not personal networks or intuition.

Book a free demo today! See how Cohort AI helps your team hire faster, smarter, and more fairly.